Sara Saffari Deepfake: What You NEED To Know!

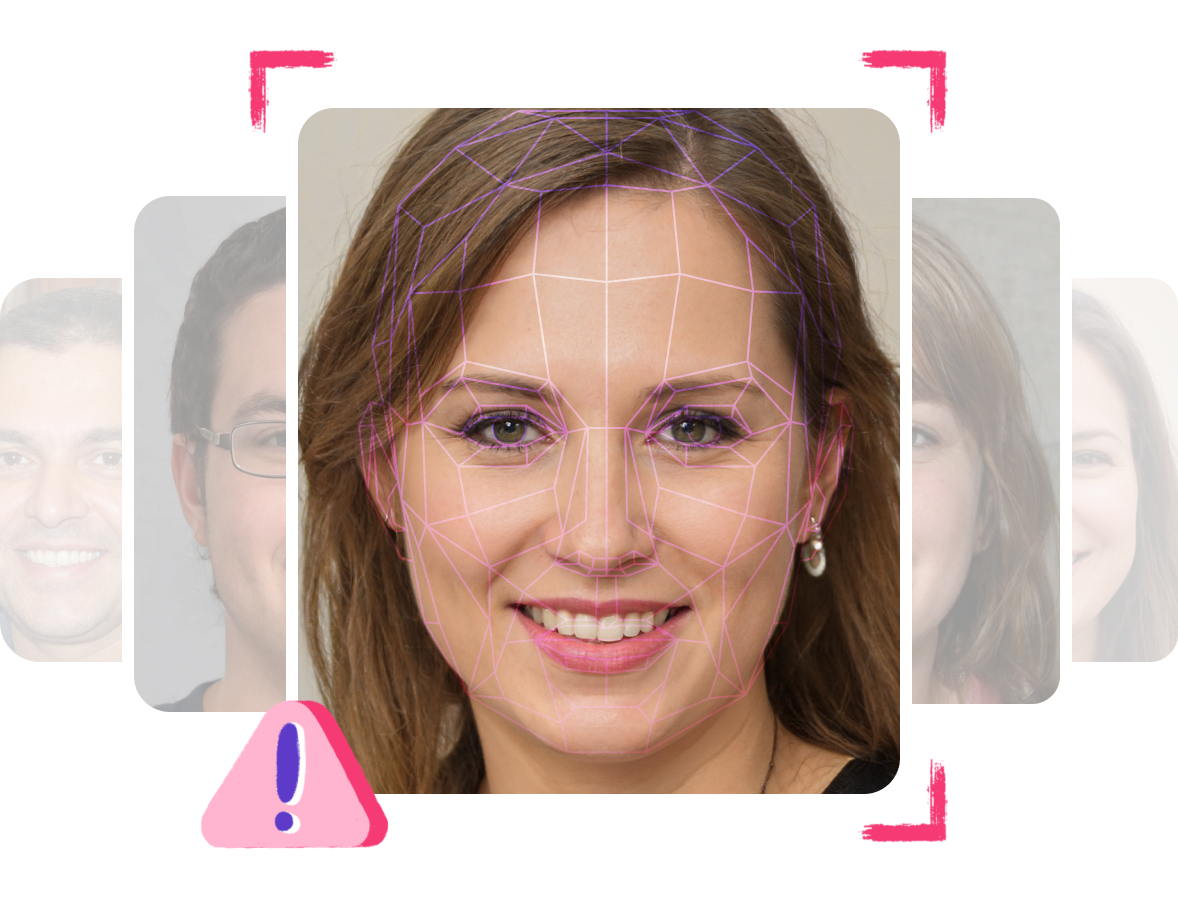

Is the digital facade of "Sara Saffari" truly crumbling before our eyes, or is this just another illusion woven by the relentless march of technology? The emergence of "Sara Saffari deepfake" represents a chilling escalation in the manipulation of reality, forcing us to confront the unsettling potential of artificial intelligence to blur the lines between what is authentic and what is fabricated.

The term "Sara Saffari deepfake" has spread quickly online, prompting concern and debate. It suggests sophisticated, AI-generated content crafted to impersonate an individual, often with malicious intent. This goes beyond simple photo editing or crude video manipulation: deepfakes use machine learning models to create disturbingly realistic simulations that can mimic a person's appearance, voice, and even mannerisms. The implications are far-reaching, touching on privacy, reputation, and the very fabric of trust in the digital age. The case discussed here, deepfake technology applied to an individual known as "Sara Saffari," is hypothetical. The concern is not the person but the technology, and the same principles apply to any individual.

| Category | Details |
|---|---|
| Subject's Name | Sara Saffari (Hypothetical) |
| Age | Not Applicable (Hypothetical) |
| Nationality | Not Applicable (Hypothetical) |
| Known For | Subject of Deepfake Content (Hypothetical) |
| Current Status | Not Applicable (Hypothetical; assumes the person is the subject of deepfake content and not a public figure in a traditional sense.) |
| Potential Areas of Impact | Privacy violation, reputation damage, spread of misinformation, emotional distress. |
| Concerns | Use of AI technology for malicious purposes, potential for widespread disinformation, erosion of trust in digital content. |
| Ethical Considerations | Impact on individual's reputation and well-being, societal implications of manipulated media, need for regulation of deepfake technology. |
| Professional Context | Could involve the subject's career and professional standing, leading to potential damage and creating obstacles. |
| Legal Aspects | Potential defamation, invasion of privacy, possible legal recourse against deepfake creators, depending on the content and jurisdiction. |
| Reference | USA Today - What are deepfakes? How do they work? (This link provides general information about deepfakes, is for informational purposes only, and does not relate to "Sara Saffari" directly.) |

The very essence of "Sara Saffari deepfake" lies in the unsettling capacity of AI to replicate the persona of an individual. This imitation can take many forms: videos showing "Sara Saffari" seemingly saying or doing things she never did; audio recordings that mimic her voice, conveying fabricated statements; or images that place her in situations entirely of the creator's imagination. The technology behind this, generative adversarial networks (GANs) and other deep learning models, has advanced to the point where the distinction between reality and fabrication is increasingly blurred. The consequences are profound. Consider the potential for spreading misinformation, damaging reputations, or even influencing elections. The ability to create believable yet entirely false content poses a significant threat to individuals and to society as a whole. The focus here is not on the person but on the phenomenon; "Sara Saffari" serves only as an example for understanding it.

The implications extend beyond mere technological novelty. Imagine the potential for reputational damage. A deepfake video, expertly crafted to portray "Sara Saffari" in a compromising situation, could go viral, affecting her personal and professional life. The speed and reach of social media let such content spread rapidly, making the damage incredibly difficult to contain. Correcting the record and debunking the false narrative can be an uphill battle against the relentless tide of online content. This is the chilling reality presented by "Sara Saffari deepfake": the possibility of a person's identity being weaponized for malicious purposes.

The ethical considerations surrounding "Sara Saffari deepfake" are equally complex. Where does the responsibility lie when such content is created and disseminated? Should tech companies bear some responsibility for preventing the spread of deepfakes on their platforms? What legal recourse exists for victims? The current legal and ethical frameworks are struggling to keep pace with the rapid advancements in AI technology. Existing laws regarding defamation, privacy, and impersonation may need to be re-evaluated and updated to address the unique challenges posed by deepfakes. The very nature of truth itself is under scrutiny. What can we believe when any video, any image, or any audio recording could be a fabrication?

The proliferation of "Sara Saffari deepfake" scenarios also raises serious questions about digital literacy. How can individuals be empowered to tell authentic content from fabricated content? Education becomes a crucial weapon in the fight against misinformation. People must learn to critically evaluate what they see and hear online, to question the source of a piece of content as well as the motivation and methods behind it, and to be wary of content that seems too good (or too bad) to be true. This requires a multi-pronged approach involving educational institutions, tech companies, and individuals. Vigilance and critical thinking are paramount in this new digital landscape.

The creation of a "Sara Saffari deepfake" isn't just about technological capabilities; it's also about the motivations of those who wield such power. What drives someone to create and disseminate deepfake content? Is it for financial gain, political manipulation, or simply the desire to cause harm? Understanding the motivations behind deepfake creation is crucial to addressing the problem effectively. Law enforcement agencies and researchers need to investigate the origins and purposes of these creations. This includes forensic analysis to identify the source of the content, to understand the intended audience, and to identify the potential harm.

The impact of "Sara Saffari deepfake" extends to the erosion of trust in media and institutions. If videos and audio recordings can no longer be relied upon as accurate representations of reality, how can we trust the news, the legal system, or even our own observations? This breakdown of trust can have profound consequences for democracy, public discourse, and social cohesion. The ability to fabricate convincing content can be used to undermine political opponents, spread propaganda, and create a climate of distrust. It also makes the work of journalists who are fighting misinformation harder.

Technological solutions are also emerging in the fight against deepfakes. Researchers are developing tools and techniques to detect manipulated content. These tools take a variety of approaches, but many analyze the subtle imperfections in deepfake videos, such as inconsistencies in lighting, facial expressions, and lip-syncing. The goal is to identify deepfakes reliably and to alert the public to manipulated content. The effort also involves teaching the public to recognize the signs of a deepfake and to be wary of content that seems unusual or suspicious.
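To make the idea of a "lighting inconsistency" concrete, here is a deliberately simplified Python sketch. It is a toy heuristic, not a real deepfake detector: practical tools rely on trained models and many signals at once. The function names, the tiny hand-written frames, and the threshold of 40 are all assumptions made for illustration. The sketch only flags frames whose average brightness jumps sharply compared with the previous frame.

```python
from statistics import mean


def frame_brightness(frame):
    """Mean pixel intensity of one grayscale frame (rows of 0-255 integers)."""
    return mean(pixel for row in frame for pixel in row)


def flag_lighting_jumps(frames, threshold=40.0):
    """Return indices of frames whose mean brightness differs from the
    previous frame by more than `threshold` (an arbitrary, illustrative value)."""
    levels = [frame_brightness(frame) for frame in frames]
    return [
        i
        for i in range(1, len(levels))
        if abs(levels[i] - levels[i - 1]) > threshold
    ]


# Hypothetical 2x2 "frames": three evenly lit, one much brighter.
steady = [[100, 102], [101, 99]]
bright = [[180, 182], [181, 179]]
clip = [steady, steady, bright, steady]

print(flag_lighting_jumps(clip))  # [2, 3]: the jump into and out of the bright frame
```

A flagged frame is only a prompt for closer human review. Genuine footage also contains abrupt lighting changes, such as scene cuts or camera flashes, so a check like this cannot confirm on its own that a video was manipulated.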

Legislation and regulation are crucial components of the response to the threat posed by "Sara Saffari deepfake". Laws need to be created or updated to address the creation, dissemination, and use of deepfakes. This might involve criminalizing the malicious creation and distribution of deepfakes, establishing civil penalties for those who create and spread false content, and creating mechanisms to protect individuals from the harm caused by deepfakes. These laws would need to be balanced to protect free speech and to avoid unintentionally stifling legitimate uses of AI technology. International cooperation will also be essential, as deepfakes can easily transcend national borders.

The hypothetical case of "Sara Saffari deepfake" underscores the importance of proactive measures. Reacting to deepfakes after they appear is not enough; steps are also needed to limit their creation and spread in the first place. This includes educating the public, promoting digital literacy, investing in research and development of deepfake detection technologies, and working with tech companies to develop strategies for identifying and removing deepfakes from their platforms. This requires a collaborative effort involving governments, tech companies, researchers, and individuals. The future of reality itself depends on it.

The potential for deepfakes to be used in political campaigns is a serious concern. The same techniques, applied to a politician, could produce fabricated videos of them making controversial statements, engaging in unethical conduct, or endorsing policies they do not support. Such content could have a significant impact on elections and public opinion. This underscores the urgency of establishing regulations to prevent the use of deepfakes for political manipulation. The aim is to protect the integrity of the electoral process and to ensure that voters make decisions based on accurate information, so that political discourse rests on facts and not on fabricated content.

One of the most disturbing aspects of "Sara Saffari deepfake" is the potential for it to be used for revenge, harassment, or extortion. Imagine a deepfake video created to humiliate or embarrass "Sara Saffari," or to damage her relationships. This could have devastating consequences for her reputation, her mental health, and her personal safety. Even the possibility of such an attack can create a climate of fear and distrust. Legislation and regulation are crucial to protect potential victims and to ensure accountability for those who create and disseminate such content.

The term "Sara Saffari deepfake" represents not only a technological challenge but also a moral one. It requires us to consider our individual and collective responsibilities in the digital age. Are we prepared to critically assess the information we consume online? Are we prepared to question the authenticity of videos, images, and audio recordings? Are we prepared to report suspected deepfakes when we encounter them? The rise of deepfakes is a wake-up call. It forces us to question the very nature of reality and to be more vigilant about what we see and hear.

The fight against "Sara Saffari deepfake" is not just about preventing the creation and dissemination of manipulated content; it's also about preserving trust in our institutions, protecting individual reputations, and maintaining the integrity of our information ecosystem. The stakes are high, and the challenges are complex. Success will require a concerted effort from all sectors of society, from governments and tech companies to educators and individuals. The future of truth itself may depend on the actions that we take now.