Mrdeepfack Scares: What You MUST Know Now!

Is it possible to manufacture reality? The emergence of "mrdeepfack" has brought this unsettling question to the forefront, forcing us to confront an evolving landscape of digital deception. The ease with which manipulated content can now be created and disseminated poses a profound threat, not only to individual reputations but also to the foundations of trust in information itself.

The term "mrdeepfack" names a phenomenon that is rapidly reshaping how we consume media: the sophisticated manipulation of digital content, primarily with deep learning. These technologies can generate hyperrealistic images and videos, which are used to build convincing but entirely fabricated narratives. The implications reach from political discourse and celebrity culture to financial markets and personal relationships. We are at a critical juncture where the line between the real and the simulated is increasingly blurred, and that demands a critical re-evaluation of how we perceive and interact with information online.

The identity behind the moniker "mrdeepfack", and the full extent of the activities linked to it, remain under investigation. Initial reports point to the creation and distribution of highly realistic but fabricated content. This content often targets private individuals, organizations, or public figures, with the aim of swaying public opinion, damaging reputations, or generating financial gain. The anonymity of the internet, combined with the sophistication of the technology, makes it hard to identify and hold accountable those responsible. The impact goes well beyond the superficial: such content erodes the credibility of sources, fuels mistrust, and can be used to silence dissent or spread misinformation at scale. Producing it often involves advanced techniques, from face manipulation and voice cloning to carefully crafted supporting narratives that make the fabricated material more believable.

The motives behind activities associated with "mrdeepfack" vary. Financial gain, through extortion, fraud, or market manipulation, is a common driver. Political agendas, such as discrediting opponents or spreading propaganda, also play a significant role. Personal vendettas, a desire to inflict harm, or simply the thrill of deceiving others can motivate such content as well. Understanding these motives is critical to countering the threat, whether by improving detection technology, educating the public about the risks, or promoting responsible online behavior. Because the tools are easy to obtain and use, the barrier to entry has dropped, leading to a proliferation of manipulated content that is increasingly hard to identify and mitigate. Building detection tools that can flag manipulated content with high accuracy is therefore a priority for cybersecurity experts and law enforcement agencies worldwide.

The implications for individuals are particularly concerning. Fabricated content spread online can ruin careers and personal lives. Victims often face an uphill battle to clear their names, because the speed and virality of online content make it difficult to control the narrative. The psychological impact can also be devastating, leaving victims feeling violated, vulnerable, and isolated. The legal and ethical dimensions are complex, and existing laws often struggle to keep pace with the technology. Defining the boundaries of acceptable online behavior, protecting individual rights, and holding perpetrators accountable are key challenges for policymakers and legal professionals alike. Because these threats cross borders, international cooperation and standardized legal frameworks are essential to address them effectively.

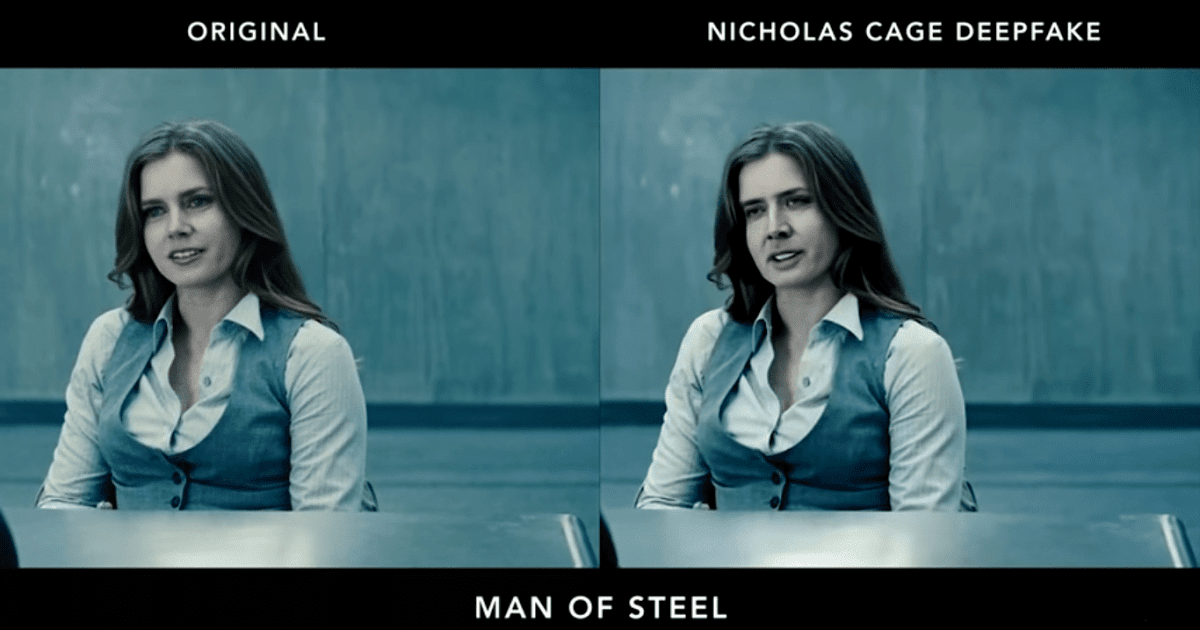

In the context of "mrdeepfack", deep learning refers to artificial intelligence models, such as autoencoders and generative adversarial networks (GANs), that are trained on large datasets to generate highly realistic but artificial content. These models learn intricate patterns and features, which lets them produce convincing replicas of a person's face, voice, and even movements. The resulting deepfakes can show people in situations they never experienced, blurring the line between reality and fabrication. The techniques keep evolving, and each improvement makes detection harder. Understanding these technical underpinnings is crucial to appreciating the scale of the challenge and developing effective countermeasures. The technology also raises ethical questions about the responsible use of AI and the potential for unintended consequences.

The methods used by those associated with "mrdeepfack" are diverse and evolving. They often combine open-source software and readily available tools with custom algorithms and more sophisticated manipulation. Technical expertise varies, but the common denominator is the ability to use technology to produce convincing fabrications. Social engineering techniques used to gather information about targets, such as their online activity, personal relationships, and public statements, often play a crucial role in making deepfakes more believable. Blending in with existing online content and exploiting pre-existing biases make these attacks more effective still. Countering them requires a multi-pronged approach that combines technical solutions, public awareness campaigns, and enforcement of existing laws.

The impact of "mrdeepfack" extends beyond individual cases, posing a threat to the integrity of information and the democratic process. The spread of misinformation, amplified by social media and other online platforms, can undermine trust in institutions, erode public discourse, and influence elections. The ability to create convincing narratives, even if based on fabricated information, can sway public opinion and manipulate political outcomes. The vulnerabilities in existing information ecosystems, from news organizations to social media platforms, are being actively exploited. Addressing these threats requires a collective effort, involving governments, technology companies, media organizations, and the public at large. Strengthening media literacy, promoting fact-checking initiatives, and holding platforms accountable for the content they host are all vital steps.

Combating the activities associated with "mrdeepfack" means advancing on three fronts: detection technology, public education, and legal frameworks. Detection tools under development use a range of techniques, including analyzing video and audio for anomalies, spotting inconsistencies in facial expressions and lip movements, and identifying recurring patterns of manipulation. Public awareness campaigns are crucial for educating people about the risks and promoting critical thinking. Stronger legal frameworks are needed to hold perpetrators accountable and deter future attacks. An ongoing dialogue between technologists, policymakers, and the public is essential to navigate this rapidly evolving landscape.

The future of digital information is closely tied to the evolving threat posed by "mrdeepfack." As technology advances, manipulated content is likely to become more sophisticated and more common, so anticipating and adapting to these changes is crucial. Efforts will probably center on more advanced detection technology, media literacy, and stricter regulation of the creation and dissemination of manipulated content. Artificial intelligence will likely play an even more prominent role on both sides, creating deepfakes and combating them, which makes the ethical questions around its use increasingly important. In the meantime, the public must cultivate critical thinking skills and stay vigilant, recognizing the potential for manipulation and questioning the authenticity of online information.